KEYframe

Wednesday, October 11, 2006

New control object

The old multi-modal resistor-based control object was a bit too big to move around, and it also depended on connections at two points.

Ideally I wanted to reduce all physical objects to one single object.

This latest object is a step in that direction.

The cv-jit library for Max/MSP can count the number of light points and send messages to Max that trigger changes in the graphics.

New portable version

Going to Trondheim this weekend for Nordic Music Technology Days (NOMUTE) + Matchmaking.

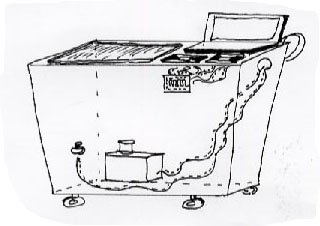

Had to make a portable version of my table

Photo shows how I have attached the legs to two tables. Camera is on a stick down from the table.

I also had to make a universal video-projector stand because I don't know what kind they have in Trondheim.

Monday, June 19, 2006

Concept III, Image Schemata

Image schemata is a term from cognitive psychology: we organize our impressions according to patterns which are founded in the experience of our own bodies in interaction with the world.

”…..embodied patterns of meaningfully

organized experience, such as structures of

bodily movement, and perceptual interactions..”

”…structures which our experience must fit if

it is to be coherent and comprehensible..”

(Mark Johnson)

Container

Blockage

Path

Cycle

Part/whole

Full/empty

Surface

Balance

Attraction

Link

Near-far

Object

Center-periphery

Inside/ Outside

Early ideas for a hollow central object which could detect RFID-tagged objects inserted into it. This could involve marbles representing either a soundfile change or an added effect. The system would involve a wireless RFID reader.

Concept II, Keyframe interpolation

Keyframe interpolation: an extension of animation terminology

The system has a double interpolation.

Interpolation between contrasting settings within one effect, and interpolation in time

Taking a few contrasting musical situations, represented by

a graphical object, and interpolating between the situations in time.
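
The double interpolation described above can be sketched roughly as follows. This is a minimal Python illustration, not the Max/MSP patch used in the project; the effect parameters (`delay_ms`, `feedback`), the keyframe times and the function names are all hypothetical, and linear interpolation is assumed.

```python
def lerp(a, b, t):
    """Linear interpolation between a and b for t in [0, 1]."""
    return a + (b - a) * t


def blend_settings(setting_a, setting_b, amount):
    """First interpolation: blend two contrasting settings of one effect."""
    return {name: lerp(setting_a[name], setting_b[name], amount)
            for name in setting_a}


def amount_at(keyframes, time):
    """Second interpolation: the blend amount at a given time.

    keyframes is a list of (time, amount) pairs with strictly increasing times.
    """
    if time <= keyframes[0][0]:
        return keyframes[0][1]
    for (t0, a0), (t1, a1) in zip(keyframes, keyframes[1:]):
        if time <= t1:
            return lerp(a0, a1, (time - t0) / (t1 - t0))
    return keyframes[-1][1]


# Hypothetical delay effect with two contrasting settings (assumed values).
dry = {"delay_ms": 0.0, "feedback": 0.0}
wet = {"delay_ms": 400.0, "feedback": 0.8}

# Hypothetical keyframes: (time in seconds, blend amount between dry and wet).
keyframes = [(0.0, 0.0), (4.0, 1.0), (8.0, 0.5)]

# Halfway between the first two keyframes the effect sits halfway between
# the dry and wet settings.
settings_now = blend_settings(dry, wet, amount_at(keyframes, 2.0))
```

Each keyframe here stores only a blend amount, so a few contrasting situations are enough to describe a whole passage; everything in between is generated.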

The system offers both graphical and auditory feedback and anticipation at the moment of playback, and gives the user creative feedback for further idea generation.

Interpolation as a generative principle in time-based art.

This simple but powerful technique creates variation and consistency.

An attractive principle in time-based art, like music.

Musical objects implementation

My overall aim has been to create a graphical environment which gives a more intuitive impression of the various properties of each sound and its relation to other sounds.

The graphics should not depict the sound itself (which is an abstract, unspeakable entity), but the control parameters assigned to the sound.

Each object represents a sound, with an indication of strength, location in space, location in time and various effect settings (tentacles).

Multidimensional analysis

To solve this problem I have drawn upon experiences from multidimensional representation in music and design research, plotting qualities of sound in a three-axis system, with each axis being a dichotomous pair of sound metaphors.

These multidimensional representation techniques give a good overview of the subject and easy access to comparative analysis.
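
As a rough illustration of the three-axis idea, each sound can be treated as a point in a cube and compared by distance. The axis names, the sounds and their coordinates below are invented for the example and are not taken from the project.

```python
import math

# Hypothetical axes: each runs from -1 (first metaphor) to +1 (second metaphor).
AXES = ("soft/hard", "dark/bright", "near/far")

# Hypothetical sounds placed in the three-axis system (assumed values).
sounds = {
    "pad": (-0.8, -0.5, 0.6),
    "bell": (0.2, 0.9, 0.1),
    "kick": (0.9, -0.7, -0.8),
}


def closest_sound(name, sounds):
    """Return the sound that lies nearest to `name` in the three-axis space."""
    origin = sounds[name]
    distances = {other: math.dist(origin, position)
                 for other, position in sounds.items() if other != name}
    return min(distances, key=distances.get)
```

Calling `closest_sound("pad", sounds)` picks the sound whose plotted qualities are most similar to the pad, which is the kind of comparative reading the plot is meant to make easy.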

Multi-sensory modal Cube

Due to weak IR-light results and focus problems with the hacked camera, I decided to move my attention to a secondary multi-sensory object.

By measuring various degrees of resistance, the software triggers various modal interfaces. The resistance measuring is done with an Arduino chip, which sends its results to Max/MSP through the serial object.
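
The step from a measured resistance to a mode could look something like the sketch below. It is written in Python for illustration only (the project does this inside Max/MSP), and it assumes the reading arrives as a 10-bit analog value (0 to 1023); the mode names, expected readings and tolerance are hypothetical.

```python
# Hypothetical expected analog readings (0-1023) for the three resistors.
EXPECTED_READINGS = {"mode_a": 200, "mode_b": 500, "mode_c": 800}

# Hypothetical tolerance: readings further away than this select no mode.
TOLERANCE = 100


def mode_from_reading(reading):
    """Return the mode whose expected reading is nearest, or None if none is close."""
    name, expected = min(EXPECTED_READINGS.items(),
                         key=lambda item: abs(item[1] - reading))
    if abs(expected - reading) <= TOLERANCE:
        return name
    return None
```

Picking the nearest expected value with a tolerance, rather than testing for exact values, leaves room for noisy contact between the cube and the metal points.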

I also experimented with tactility to solve the problem of multi-point attention.

By adding various surface qualities (sponge, sandpaper etc.) the user can keep his focus on the screen and recognize the various modes through the tactile quality. (The metal points for the 3 resistors are on the opposite sides of the three colours.)

Technical development of tracking and modal shifting.

I planned to do the tracking with jitter/cv-jit.

I was going to send out infra-red light from the physical objects to separate the graphics on the screen from the information coming from the physical object.

I did some experiments with removing the IR filter from ordinary web-cams.

I wanted all functions to be handled by one central physical object.

I imagined a geometrical object which could be tipped from side to side, triggering the 3 modes and handling both position and rotation.

By combining triangular shapes and various numbers of light diodes I would take care of rotation, position and modal issues.
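
One possible reading of this idea, sketched in Python: the tracker delivers a list of light points, the number of points selects the mode, the centroid gives the position, and the point furthest from the centroid (the apex of an elongated triangle) gives the rotation. These rules and the function name are assumptions made for the example, not the method actually used with cv-jit.

```python
import math


def describe_object(points):
    """Derive mode, position and rotation from a list of (x, y) light points.

    Assumptions (not from the original project): mode = number of points,
    position = centroid, rotation = direction from the centroid to the
    point furthest from it.
    """
    if not points:
        return None
    count = len(points)
    cx = sum(x for x, _ in points) / count
    cy = sum(y for _, y in points) / count
    apex = max(points, key=lambda p: math.hypot(p[0] - cx, p[1] - cy))
    rotation = math.degrees(math.atan2(apex[1] - cy, apex[0] - cx))
    return {"mode": count, "position": (cx, cy), "rotation_deg": rotation}
```

In camera images the y axis usually points downwards, so the sign of the angle would need to be flipped to match on-screen rotation.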

Example of drilling triangular shapes into acrylic surfaces. By sending horizontal light through the plate, the drilled triangle is illuminated. (Lighting solution: Stig Skjellvik)

Purpose

Structuring and simplification of the heterogeneous, modal style of traditional music software.

Offering creative feedback through the system-design to battle the overwhelming technical possibilities of modern music-software.

Multimodal anticipation and experience of the sound and its control parameters, individually and in relation to other soundfiles.

Thursday, March 16, 2006

FINAL PROJECT - research into existing table-projects

Here are some links to (musical) table projects:

- Audiopad:

- Audiopad (PDF): a composition and performance instrument for electronic music which tracks the positions of objects on a tabletop surface and converts their motion into music.

To determine the position of the RF tag on a two-dimensional surface, they use a modified version of the sensing apparatus found in the Zowie Ellie's Enchanted Garden playset. To move the characters on-screen, children simply move them on the playset.

- Sensetable: a system that wirelessly tracks the positions of multiple objects on a flat display surface quickly and accurately (similar to Audiopad, but without sound).

- Multi-Touch Interaction Research (Jefferson Y. Han) (WEB)

- Low-Cost Multi-Touch Sensing through Frustrated Total Internal Reflection (PDF)

Camera based (need password)

- Lemur: unique and patented touchscreen technology that can track multiple fingers simultaneously.

- The Manual Input Sessions (Golan Levin) (WEB) (PDF)

A series of audiovisual vignettes which probe the expressive possibilities of free-form hand gestures. Performed on a hybrid projection system which combines a traditional analog overhead projector and a digital PC video projector, the vision-based software instruments generate dynamic sounds and graphics solely in response to the forms and movements of the silhouette contours of the user’s hands.

- The Audiovisual Environment Suite (AVES) is a set of five interactive systems which allow people to create and perform abstract animation and synthetic sound in real time.

- Heavy rotation revisor: Input from five radio stations is analyzed, cut up into abstract fragments and thrown onto the desk. By using the mouse and headphones, the players can listen to the fragments, combine them and create abstract sound scapes.

- DiamondTouch (WEB)

- DiamondTouch (PDF): A Multi-User Touch Technology

A technique for creating touch sensitive surfaces is proposed which allows multiple, simultaneous users to interact in an intuitive fashion. Touch location information is determined independently for each user, allowing each touch on the common surface to be associated with a particular user.

Antenna based

Thursday, March 02, 2006

WORKSHOP Processing Atelier Nord

View this clip on Vimeo

Processing is a graphical tool built on a Java-related language.

I attended the Processing workshop to be able to build a physical music editor with extended soundfile representation.

Here are some comments on the chapters in the QuickTime pop-up menu (bottom right corner):

#1-#5 Basic shapes and drawing

#6 Calculating the positions of objects on another circle using cos and sin

#7-8 Controlling an object's position, size, and movement with OSC communication from Max/MSP (in real time)

#9-10 Experimenting with multiple shapes for soundfile representation, containing info about different processing parameters (tentacles), volume (size) and stereo panning (rotation)

#11-15 Creating multiple dots with arrays. Scenario for soundfiles in the time/amplitude domain. Through interaction with physical objects, certain soundfiles will be highlighted for playback or editing

#16-17 Soundfile with an indicated length (line)

#18 Cursor experiments
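
The cos/sin placement mentioned for chapter #6 can be sketched as below. The example is in Python rather than Processing, and the function name and parameters are made up for illustration; the same formula could place tentacles evenly around a sound object.

```python
import math


def points_on_circle(cx, cy, radius, count):
    """Return `count` evenly spaced (x, y) points on a circle around (cx, cy)."""
    points = []
    for i in range(count):
        angle = 2 * math.pi * i / count  # angle in radians for point i
        x = cx + radius * math.cos(angle)
        y = cy + radius * math.sin(angle)
        points.append((x, y))
    return points
```

For example, `points_on_circle(100, 100, 40, 6)` gives six positions spaced 60 degrees apart around the point (100, 100).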

Wednesday, March 01, 2006

TASK3 Touchable Services

View this clip on Vimeo

Using RFID tags in an urban environment for retrieving URL links via an RFID reader attached to a standard mobile phone.

An RFID tag is a small, cheap tag that may contain a small amount of information and does not require any power supply. Tags might contain an ID number referring to a database, or a URL (physical web links).
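
The two kinds of tag content could be handled along these lines. This is a hedged Python sketch: the tag IDs, the `example.com` addresses and the function name are placeholders, and nothing here reflects a specific RFID reader API.

```python
# Hypothetical database mapping tag ID numbers to web pages.
TAG_DATABASE = {
    "0001": "https://example.com/record-shop/flyer",
    "0002": "https://example.com/gallery/opening-hours",
}


def resolve_tag(payload):
    """Return the URL for a tag payload, or None if the tag is unknown.

    A payload that already looks like a URL is used directly;
    anything else is treated as an ID number and looked up.
    """
    if payload.startswith(("http://", "https://")):
        return payload
    return TAG_DATABASE.get(payload)
```

Storing only an ID on the tag keeps the tag content small and lets the linked page change later without rewriting the tag.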

The information/webpages can either be downloaded directly to the phone, or bookmarked to your mail, phone, or a friend.

We have exemplified this service for community-based underground shops (music, art). We imagine that the service may include scanning flyers, listening to or downloading recommendations, signing up for mailing lists etc.

Here are some comments on the chapters in the QuickTime pop-up menu:

#2 is an example from an RFID system already implemented in Japan. This is the identification mascot

#3-7 suggest a navigation map in tram stations. By holding your phone over highlighted sectors of the city, the display will show info for venues included in the service, or download info

#8-13 Introducing "window-shopping" possibilities: listening to recommendations, checking opening hours, or signing up for email services.

#14-16 Technical specifications. When making contact between tag and phone you will have to choose between download, mail-bookmark etc. At the same time you may view basic info on cover art and track/album info